iOS 5 Potential and Accessibility Features for Classrooms

I am participating in the Apple Academy, held this week in Cupertino. While I have learned much in just the first day, I will share two things that I look forward to bringing into classrooms: potential iOS 5 features and the current iOS 4 accessibility features.

Let’s start with the future: while I have been following articles about iOS 5 features, we learned about two potential ones that had slipped through the cracks in my reading. One involves AirPlay, Apple’s service for pushing an iPad screen wirelessly to an Apple TV for display on a large screen. Did you experience the disappointment with the original iPad when you discovered only a small number of apps could be pushed to a projector through its dongle? It is the same with AirPlay in its current state–only a small number of apps are made to push content, which is too limiting to consider implementing in most classroom settings. But we learned that iOS 5 is slated to mirror all screen content to AirPlay. This is huge for classrooms! I have spent many hours presenting with the iPad, and the dongle is a problem if you want to stand at all–to the point I was ready to pull out the roll of duct tape! The ability to move around the room, to hand your iPad to anyone in the room, or (in a 1:1 setting) to project anyone’s iPad screen opens a whole realm of possibilities for collaborative instructional practices. One problem, I assume, will be the HDMI-out port of the Apple TV–most older projectors only have a VGA port. It may mean replacing projectors. Too bad Apple can’t put a Mini DisplayPort in the Apple TV.

Another potential iOS 5 feature is text-to-speech, mysteriously absent in most iPad apps, particularly when you can already convert voice to text (Dragon Dictation), convert audio input to text and back to audio for translation (Google Translate), and translate text seen through the camera (Word Lens). Knowtilus is one of the few apps I have found that implements text-to-speech. If this feature does appear in the next iOS update, making text-to-speech available to any word processor or other text-based app, it will be a great boon for young readers and writers–the more I think about it, actually, for all writers. Now, Apple, if you could address the most mysterious missing feature–converting handwriting to text–it’s not like that hasn’t been tackled in past solutions (e.g. Newton)…

Now let’s move to current capabilities. We had an excellent demonstration from Sarah Herrlinger at Apple on the accessibility features of the Macintosh and iOS 4. For years, I have kept an eye on accessibility technologies, not only because they often give a preview of future features for all technologies, but also because they add capabilities for all people, not just those with special needs. I had looked at the accessibility features of the original iPad, but found them too cumbersome to be easily used. Today Sarah showed that Apple has made great strides that I had missed.

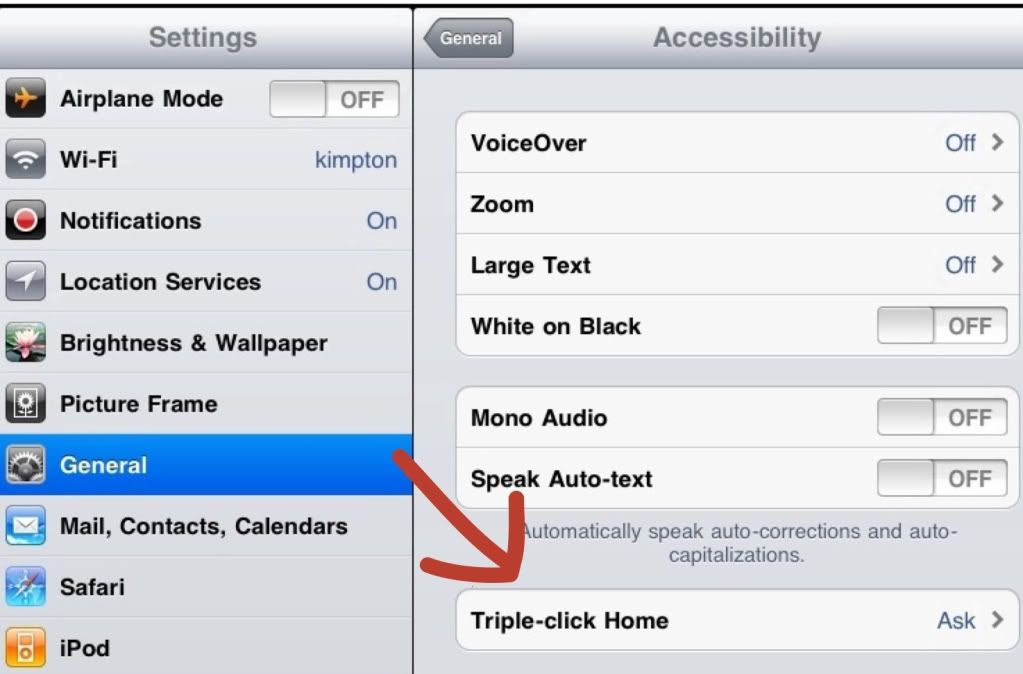

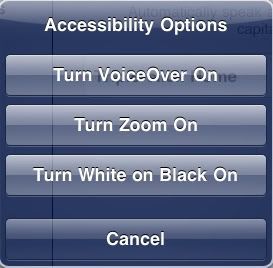

The biggest change that makes these features usable is the ability to quickly turn them on and off with a triple click of the home button.

This is particularly useful as a presenter or in an environment where iPads are shared. The Zoom feature will be one I use as a presenter now that it can be toggled with the triple click. While VoiceOver is tempting as a text-to-speech tool, the changes it makes to the user interface may make it cumbersome if all you are looking for is a simple read-back of student writing.

I had turned off auto-correct long ago, as I had sent too many email messages with laughable phrases that did not make sense. The Speak Auto-text option will persuade me to resurrect that feature and try it out again.

FYI, on the Mac side, Sarah also showed the Services option under the application menu. With text selected, you can “Summarize” a document–a very interesting feature. Another service option is “Add to iTunes as a Spoken Track.” You may have to choose Services Preferences to turn these features on. These are both features I plan to incorporate into tech integration classes with teachers.

Sarah shared some results from Canby, Oregon, where students were found to perform better on tests after the iPad implementation. My skeptic radar went off immediately, as it is difficult to attribute results in education to a single cause. But her point really struck a chord with me–iPads can be much more effective if teachers differentiate instruction. This corresponds very strongly with my personal experience–tablets and smartphones are very personal machines. I think the lesson here is that sharing iPads, or making all students do the same lockstep tasks on all iPads at the same time, will be less effective. Teachers need to use the vast array of features to differentiate student use of the devices. I have tried to make this case before, but she gave me a greater urgency to drive this point home with teachers.